This article is a transcript of our recent webinar featuring Manson Chen (CEO, Sovran, ex-Calm, ex-Cash App) and Andrey Shaktin discussing creative testing strategies. The session covers everything from creative testing fundamentals to advanced optimization techniques for web-to-app campaigns.

Explore the detailed transcript below, where we break down key insights, practical tips, and expert answers to the most pressing questions about creative testing for web-to-app. You can also watch the webinar recording at the end of the article.

State of creative testing for web-to-app

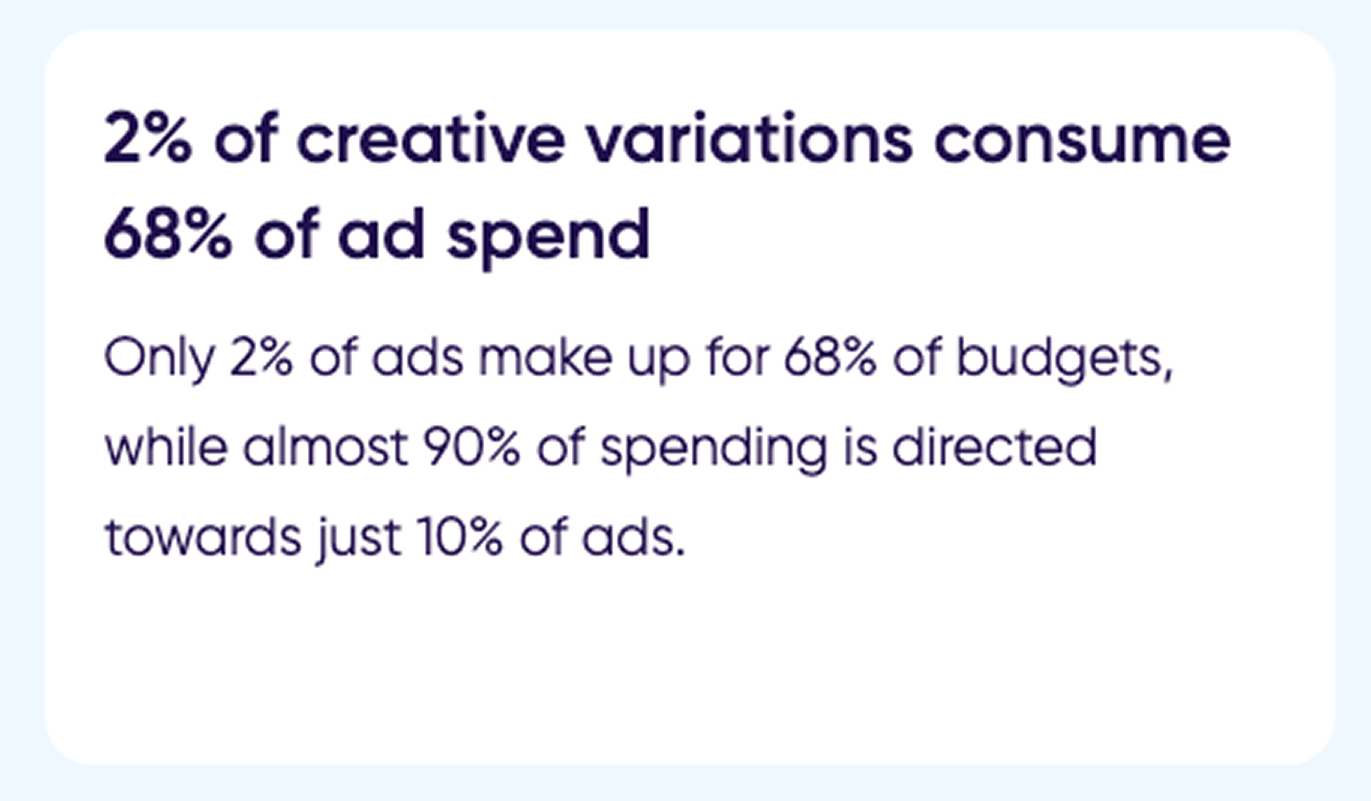

Finding unicorn ads is tough. This study from AppsFlyer came out earlier this year, and they found that just 2% of ad variations account for 68% of spend. On top of that, 90% of the budget is directed toward just 10% of ads. But here’s the catch with this data – they already excluded ads with less than $50 in spend.

In reality, far fewer than one in 50 ads even makes it to this level. To end up with just two top-performing creatives, you’d need to test roughly 100 creatives, and likely more. That’s the reality we’re in.
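To make that arithmetic concrete, here is a minimal sketch of the calculation. It assumes a flat hit rate (2% here, taken from the figure above) and treats every creative as an independent attempt, which is a simplification. The function name `creatives_needed` is ours, not from the study.

```python
import math

def creatives_needed(target_winners: int, hit_rate: float) -> int:
    """Expected number of creatives to test to end up with `target_winners` winners.

    Assumes a constant hit rate across all creatives (a simplification).
    """
    if not 0 < hit_rate <= 1:
        raise ValueError("hit_rate must be between 0 (exclusive) and 1 (inclusive)")
    # round() guards against tiny floating-point overshoot before taking the ceiling
    return math.ceil(round(target_winners / hit_rate, 9))

print(creatives_needed(2, 0.02))  # 100
```

If your real hit rate is lower than 2%, the required volume grows in proportion: at 1%, the same two winners take about 200 tests.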

This next piece of data is from Facebook. They found that the fastest-growing advertisers tested 11 times more ads per month. That’s 45 versus just 4. They also discovered that the average variance in ROAS (Return on Ad Spend) between top- and bottom-performing creatives was 11x.

Recent data from Veros, analyzing 5,000 Meta accounts, shows:

- Advertisers spending $100,000 to $500,000 per month averaged 335 ads with over 20 impressions

- Those spending $500,000 to $1,000,000 had 422 active ads

- Smaller advertisers ($10,000 to $100,000 monthly) averaged 103 ads

It’s a chicken-and-egg situation – you can’t really grow unless you test a lot of ads, but testing requires a budget.

Creative trends for web-to-app

1. Lo-Fi ads

These ads are intentionally unpolished and don’t look like traditional ads, which is a big reason why they perform so well.

Lo-Fi ads are:

- Unpolished

- Designed not to look like ads

Examples:

- Post-it notes

- Writing on a whiteboard

- iMessage, Slack messages, or Google Hangouts screenshots

- The latest trend involves stickers or secretive messaging, like “We are looking for women…” or “A giant secret to lose weight.” These are great examples of engaging formats.

2. AI UGC (user-generated content)

Tools:

- Arcads.ai

- Poolday.ai

- Creatify.ai

- Captions.ai

- TikTok Creative Symphony

- CapCut

- HeyGen

Key aspects:

- Low cost of production

- Can be polarizing but effective

- Natural, authentic feel

- Approximately 10-20% of content is AI-generated

- Efficient testing and iteration potential

3. AI statics

Primary tools:

- Midjourney

- FLUX

Pro tip: Utilize Replicate (replicate.com) for open-source text-to-image models. You can create a simple API call to generate hundreds of images at once. After that, use Canva to add text overlays, making the process much more efficient than using MidJourney’s Discord interface.
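As a rough sketch of what that batch generation could look like: the code below builds a grid of prompts and calls a model once per prompt. The model reference `black-forest-labs/flux-schnell`, the example prompts, and the helper names are our assumptions for illustration. The model call is passed in as a function so the sketch runs without network access; the Replicate Python client's `replicate.run` fits that shape.

```python
from itertools import product

# Assumed model reference; swap in whichever text-to-image model you actually use.
MODEL = "black-forest-labs/flux-schnell"

def build_prompts(subjects, styles):
    """Cross every subject with every style: one prompt per combination."""
    return [f"{subject}, {style}" for subject, style in product(subjects, styles)]

def generate_batch(prompts, run_model, model=MODEL):
    """Call the model once per prompt and collect the outputs keyed by prompt.

    `run_model` is any callable shaped like run_model(model_ref, input=dict).
    """
    return {prompt: run_model(model, input={"prompt": prompt}) for prompt in prompts}

# Hypothetical lo-fi prompt ideas, for illustration only
prompts = build_prompts(
    subjects=["handwritten post-it note on a fridge", "whiteboard with a marker sketch"],
    styles=["phone photo, natural light", "slightly blurry, candid"],
)
print(len(prompts))  # 4

# To generate for real (needs `pip install replicate` and a REPLICATE_API_TOKEN
# environment variable):
#   import replicate
#   outputs = generate_batch(prompts, replicate.run)
```

Adding more subjects or styles multiplies the number of prompts, which is how you get to hundreds of images from a short list of ideas.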

4. AI video

Current leader: Runway Gen-3

Innovative approaches:

- AI visual hooks leading into consistent body content

- Modular testing capabilities

- Example: BetterMe’s testing of AI-generated visual hooks with consistent main content

- Efficient way to test multiple variations while maintaining core messaging

5. Podcast-style ads

Examples from Obvi and Headway show this trend. The Headway ad even uses an AI voice. What’s great is that it doesn’t look like a traditional ad, playing into the authenticity factor.

Key characteristics:

- ‘Authentic’ presentation

- Doesn’t look like traditional advertising

- Leverages podcast format’s trustworthiness

What makes them effective:

- Natural integration with content

- Higher perceived trustworthiness

- Alignment with U.S. podcast consumption habits

Foundations of successful creative testing for web-to-app

1. Set up the Conversions API

If you’re relying solely on the pixel, you’re likely losing around 15% of your conversion data. By implementing the Conversions API, you give Facebook more robust signals, which allows for better algorithmic ad delivery.

Critical benefits:

- Recovers ~15% of conversion data lost through pixel-only tracking

- Provides Facebook with more robust signals

- Enhances algorithm performance

- Improves pixel distribution

- Better optimization capabilities
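For a sense of what a server-side event looks like, here is a minimal sketch that only builds the payload. It does not send anything. It assumes a website trial event; the event ID, email, and URL are made-up placeholders. Emails are trimmed, lowercased, and SHA-256 hashed before sending, and the `event_id` should match the one your pixel fires so the two events can be deduplicated. In practice the payload is POSTed to the `events` edge of your pixel ID on the Graph API along with an access token.

```python
import hashlib
import json

def hash_email(email: str) -> str:
    """Normalize (trim, lowercase) and SHA-256 hash an email address."""
    return hashlib.sha256(email.strip().lower().encode("utf-8")).hexdigest()

def build_capi_payload(event_name, event_time, event_id, email, user_agent, source_url):
    """Build a Conversions API request body for a single website event."""
    return {
        "data": [
            {
                "event_name": event_name,
                "event_time": event_time,        # Unix timestamp in seconds
                "event_id": event_id,            # reuse the pixel's event ID for deduplication
                "action_source": "website",
                "event_source_url": source_url,
                "user_data": {
                    "em": [hash_email(email)],   # hashed, never the raw email
                    "client_user_agent": user_agent,
                },
            }
        ]
    }

# Placeholder values, for illustration only
payload = build_capi_payload(
    event_name="StartTrial",
    event_time=1700000000,
    event_id="trial-0001",
    email=" Jane.Doe@Example.com ",
    user_agent="Mozilla/5.0",
    source_url="https://example.com/checkout",
)
print(json.dumps(payload, indent=2))
```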

2. Create high volume of ad variations

As the CMO of Facebook explained on an eMarketer podcast: you need a high volume of ad variations and should let your audience “vote” on the best ones. Bottom-performing ads won’t receive much spend, while top performers will.

Keeping production costs low is crucial for performance testing. Most ads won’t hit your benchmarks, so affordable production increases your chances of finding winners.

Strategy components:

- Let audience engagement determine winners

- Natural elimination of poor performers

- Efficient budget allocation to top performers

- Continuous testing and iteration

Cost considerations:

- Keep production costs low

- Enable higher testing volume

- Increase chances of finding winners

- Budget efficiency through scale

3. Never stop testing

One of the biggest mistakes brands make is finding a winning ad and then stopping. As you increase spend, winning ads will fatigue. You need a steady pipeline of new ads to replace fatigued ones.

Common mistakes to avoid:

- Relying on single winning ads

- Stopping testing after success

- Ignoring creative fatigue

Best practices:

- Maintain constant testing

- Build creative pipeline

- Prepare for ad fatigue

- Scale systematically

Research strategies for creative testing

Audience language research

A great tactic: Upload reviews to ChatGPT and extract concise five-star reviews for Facebook ads. When I was at Calm, we tested 50 different testimonials, identified the top 3-5, and used those consistently in video ads.

Use tools like GigaBrain to understand what people say about your brand online. Explore Reddit threads to capture the specific language people use.

Ad library inspiration

Brands to watch:

- Headway

- Rise

- BetterMe

- Calm

- Noom

Visual hooks strategy

Some visual hooks are intentionally absurd – and that’s what makes them effective. Don’t be afraid to loosen brand guidelines. Examples include pouring sugar into coffee or dunking a burrito in sauce.

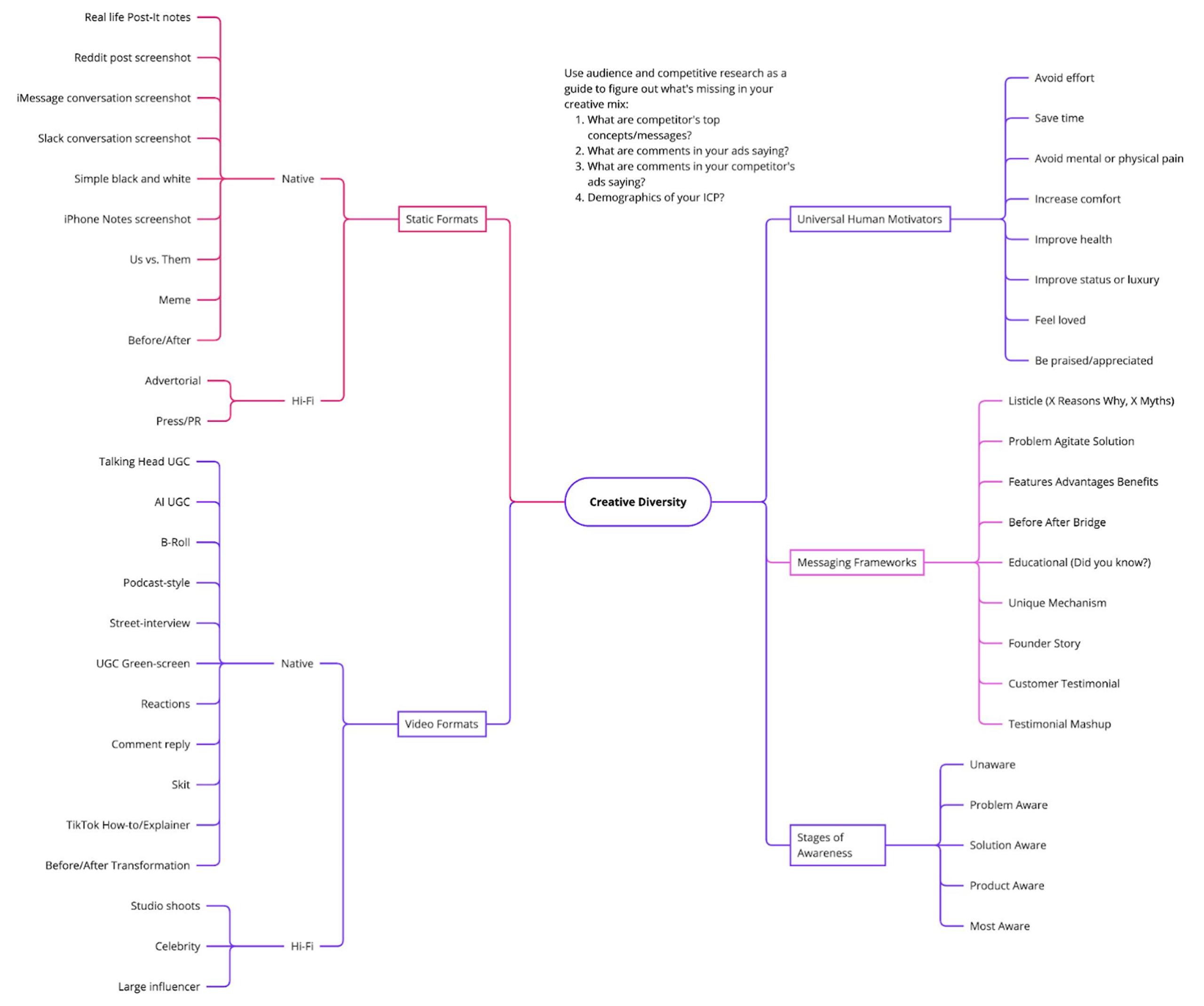

Creative diversity framework

This mind map represents our perspective on creative diversity, touching on universal human motivators (top right). If you’ve read any copywriting books, you’ll recognize these motivators – they’re often used to shape angles in advertising.

Start with the motivators in the top right and then look at the stages of awareness:

- Unaware

- Problem-aware

- Solution-aware

- Product-aware

- Most aware

For instance, if you’re targeting the bottom of the funnel, static ads featuring testimonials are highly effective. At the top of the funnel, video ads with problem-solution scripts tend to perform well.

Messaging frameworks

To refine your messaging, you can test various frameworks, such as:

- Listicle styles: For example, “Three Reasons Why…”

- Common myths: Debunking or addressing misunderstandings

- Problem-agitate-solution: A classic but proven method

Once you’ve nailed down the message and angle, you can adapt it across multiple formats.

Expanding formats

Here’s how you can scale your message:

- Static ad formats: Incorporate creative elements like post-it notes, Reddit post screenshots, or iMessage conversation screenshots

- Video formats: Leverage UGC (user-generated content), including talking-head styles and narrative-driven ads

This approach to creative diversity allows for flexibility and thorough testing.
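One way to see how quickly these dimensions multiply is to enumerate them. The sketch below crosses the awareness stages, messaging frameworks, and formats named above into a list of candidate concepts. It is an illustration of the idea, not the tooling used in the webinar, and it leaves out the motivators from the mind map because they are not listed in the text.

```python
from itertools import product

AWARENESS_STAGES = ["unaware", "problem-aware", "solution-aware", "product-aware", "most aware"]
FRAMEWORKS = ["listicle", "common myths", "problem-agitate-solution"]
FORMATS = [
    "static: post-it note",
    "static: Reddit post screenshot",
    "static: iMessage screenshot",
    "video: UGC talking head",
    "video: narrative",
]

def concept_matrix(stages, frameworks, formats):
    """Every stage x framework x format combination as a candidate concept."""
    return [
        {"stage": stage, "framework": framework, "format": fmt}
        for stage, framework, fmt in product(stages, frameworks, formats)
    ]

matrix = concept_matrix(AWARENESS_STAGES, FRAMEWORKS, FORMATS)
print(len(matrix))  # 75
print(matrix[0])
```

Nobody tests all 75 at once. The point is to pick deliberately from the grid so your tests cover different stages and formats instead of clustering around one idea.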

Testing and iteration

Creative diversity is all about testing different combinations of concepts and messages until you identify what resonates. When something works, double down on it. Refine and scale it further. The nitty-gritty of creative testing involves setting up separate campaigns for testing specific ideas.

Testing methodology

When testing creative diversity:

- Test one element at a time to clearly identify what works

- Keep track of performance by concept type

- Document insights about which combinations perform best

- Be systematic in your approach to testing

- Maintain a balance between new concepts and iterations

Remember: Success in creative diversity comes from systematic testing combined with careful analysis of results. Don’t be afraid to try unexpected combinations – sometimes the most surprising concepts deliver the best results.

Integration with overall strategy

Creative diversity should align with your:

- Customer journey mapping

- Funnel stages

- Campaign objectives

- Platform-specific requirements

- Brand guidelines (while allowing for flexibility in testing)

The goal is to find the right balance between maintaining brand consistency and exploring new creative territories that might resonate with your audience.

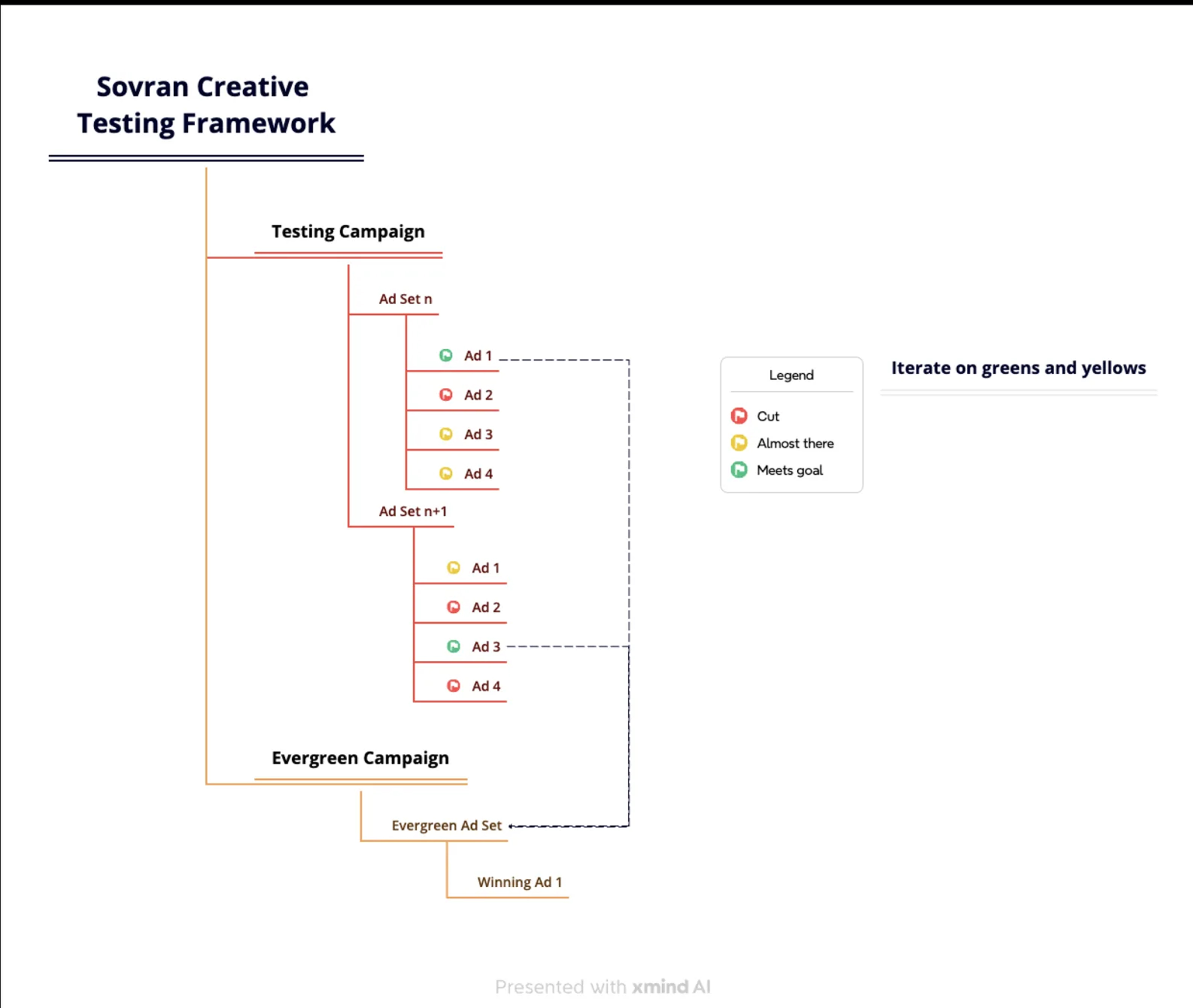

Creative testing framework

First things first – you need a dedicated testing campaign. This isn’t just another campaign; it’s your testing laboratory. Here’s how to structure it:

- Create separate ad sets for each concept you want to test

- Include 4-6 ad variations in each ad set

- When testing hooks (this is crucial), test 4 different text hooks or visual hooks at a time

Here’s the thing about testing hooks – you’ve got to keep everything else exactly the same. Why? Because if you change multiple elements, you’ll never know what actually made the difference. It’s like trying to figure out which ingredient made your recipe better when you changed five things at once.

Think of your ads like a traffic light system:

- Green ads: These hit your KPI goals. They’re your winners – send them straight to your evergreen ad sets

- Yellow ads: They’re showing promise. Keep iterating on these

- Red ads: They’re not working. Time to let them go and move on
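The traffic light system can be sketched as a simple rule on CPA. The green rule comes straight from the description above. The yellow band is our assumption: here an ad counts as yellow if its CPA is within 1.5x of the goal, and you should set that tolerance to whatever "showing promise" means for your account. The sketch also assumes the ad has already spent enough to be judged.

```python
def classify_ad(spend: float, results: int, cpa_goal: float, yellow_tolerance: float = 1.5) -> str:
    """Label an ad green, yellow, or red based on its CPA versus the goal.

    yellow_tolerance is an assumed cutoff (1.5x goal), not a figure from the webinar.
    """
    if results == 0:
        return "red"  # no results at all; assumes meaningful spend has already happened
    cpa = spend / results
    if cpa <= cpa_goal:
        return "green"
    if cpa <= cpa_goal * yellow_tolerance:
        return "yellow"
    return "red"

print(classify_ad(spend=200, results=5, cpa_goal=50))  # green  (CPA $40)
print(classify_ad(spend=300, results=5, cpa_goal=50))  # yellow (CPA $60)
print(classify_ad(spend=500, results=5, cpa_goal=50))  # red    (CPA $100)
```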

Here’s what works for targeting:

- Go broad with country-level targeting

- Aim for highest volume delivery

- Start with $100-200 per day on those ad sets

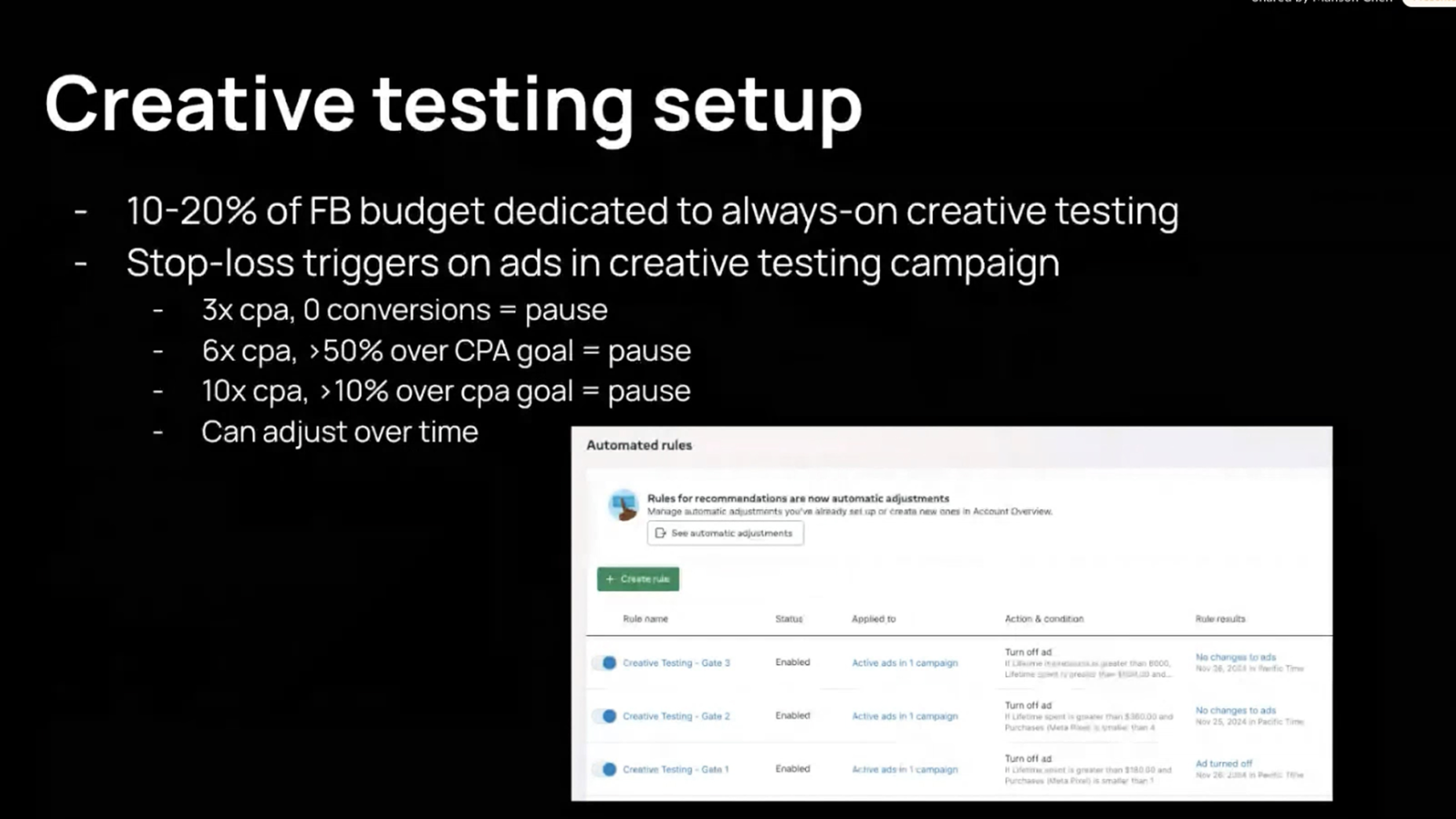

- Dedicate 10-20% of your Facebook budget to always-on creative testing

Pro tip: Don’t be afraid of broad targeting at this stage. You want enough data to make informed decisions.

Stop loss triggers

Let’s say your cost per trial goal is $50. Here’s how to set up your stop-loss triggers:

First gate: If an ad has spent $150 (that’s 3X your CPA) and hasn’t gotten a single trial – pause it.

You can set up additional gates based on your specific situation, but having these automatic triggers saves you from throwing good money after bad ads.
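The first gate is simple enough to express as a rule. This is a minimal sketch of the logic only; in practice you would configure it as an automated rule in your ads tooling. The ad names and numbers are made up for illustration.

```python
def should_pause(spend: float, trials: int, cpa_goal: float, multiple: float = 3) -> bool:
    """First stop-loss gate: pause if spend reached `multiple` x CPA goal with zero trials."""
    return trials == 0 and spend >= multiple * cpa_goal

# Made-up example ads with a $50 cost-per-trial goal
ads = [
    {"name": "ad_a", "spend": 150, "trials": 0},  # hit 3x CPA with no trials
    {"name": "ad_b", "spend": 120, "trials": 0},  # still under the gate
    {"name": "ad_c", "spend": 400, "trials": 6},  # has trials, judged on CPA instead
]
to_pause = [ad["name"] for ad in ads if should_pause(ad["spend"], ad["trials"], cpa_goal=50)]
print(to_pause)  # ['ad_a']
```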

Scaling winners: the Post ID secret

When you find a winner, don’t just duplicate the ad. Instead, use the post ID. Why? Because duplicating creates a new post and fragments your social proof. We actually A/B tested this with a client, and using post IDs performed better.

How to get that post ID:

- Go to Ads Manager

- Look at Facebook posts with comments

- Find the numbers after “post/” in the URL – that’s your post ID
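If you are pulling post IDs for a lot of ads, a small helper saves time. The sketch below assumes the URL contains `post/` or `posts/` followed by a numeric ID; the example URL is a made-up placeholder. If your URLs look different, adjust the pattern.

```python
import re

# Matches the digits right after "/post/" or "/posts/" in a URL
POST_ID_PATTERN = re.compile(r"/posts?/(\d+)")

def extract_post_id(url: str):
    """Return the numeric post ID from a post URL, or None if no ID is found."""
    match = POST_ID_PATTERN.search(url)
    return match.group(1) if match else None

# Hypothetical URL, for illustration only
print(extract_post_id("https://www.facebook.com/examplepage/posts/1234567890123456"))
# 1234567890123456
```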

Evergreen campaign setup: how to scale winning creatives

Once you’ve got winners, here’s how to scale them:

- Use bid caps or cost caps (crucial for controlling costs)

- Set a really high daily budget

- Let your cost goals be the limiting factor, not your budget

- Keep that targeting broad

- Don’t forget to exclude current subscribers

- Keep feeding it fresh creative

Sometimes ads won’t take off right away in an ad set with existing winners. That’s because Facebook keeps delivering to what’s already working. Solution? Set up a separate ad set for new winners. When I was at Smartly, our data science team found that about 6 ads per ad set was the sweet spot. And guess what? Facebook recommends the same thing.

If you’re fortunate enough to have a Facebook rep, get your account whitelisted for the bid multipliers API. This lets you bid higher on high-value demographics (like females over 40) and lower on others (like 18-24 year olds). You keep your broad targeting but optimize where your money goes. Smart, right?

Remember, testing isn’t just about finding winners – it’s about finding winners efficiently. Follow this structure, and you’ll be able to test more creatives while keeping your budget under control.

Creative testing measurement for web-to-app

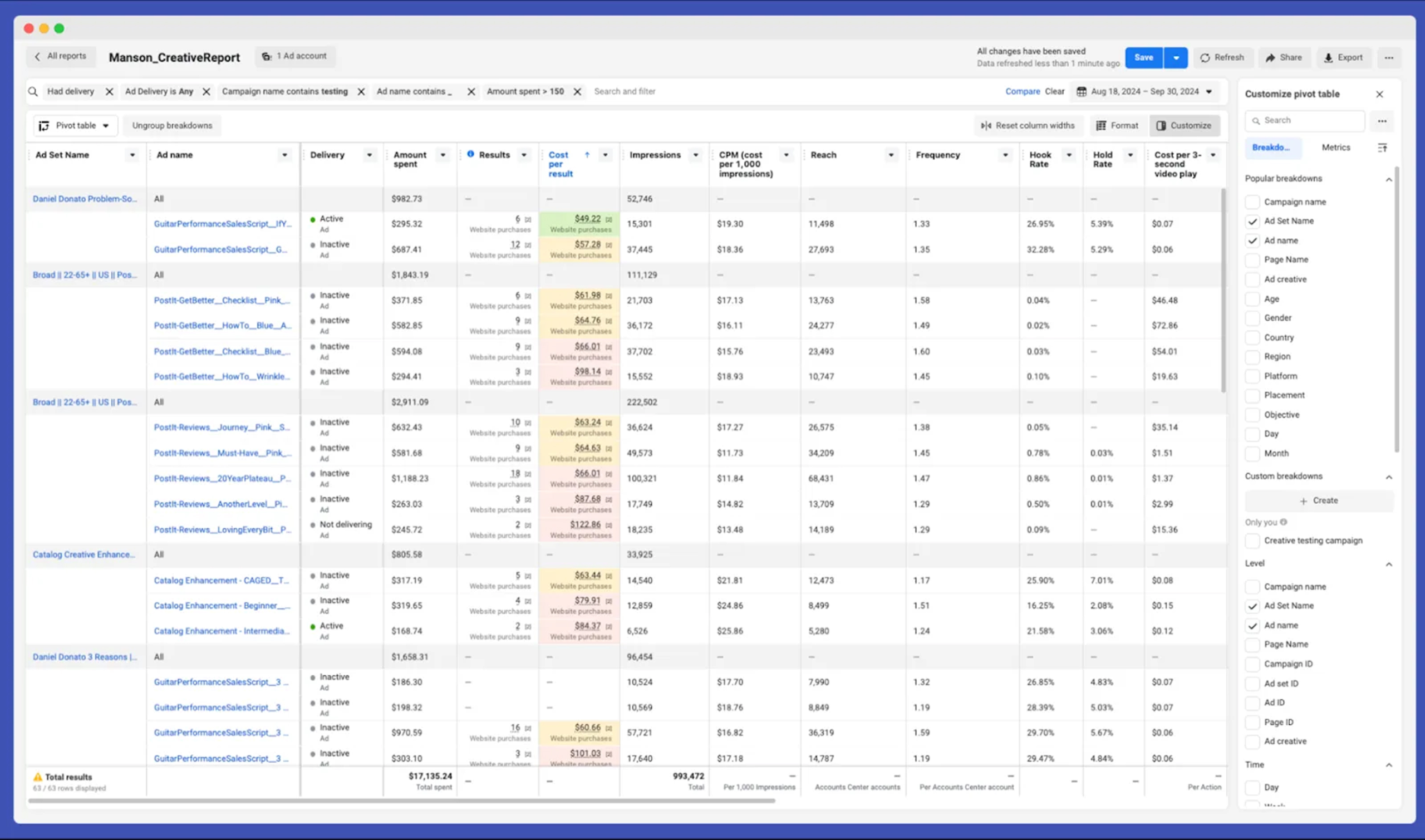

Facebook’s free ad reporting tool is super easy to set up and use. Set up color codes based on cost per result or CPA. For videos, you can also look at hook rate, hold rate, and other metrics.

Monitor your creative hit rate: the number of creatives that hit your KPI goal (for example, your CPA goal) divided by the number of creatives you’ve tested. A creative hit rate of around 10% is very good. When you’re testing video ads, benchmarks include:

- Hook rate (three-second video views divided by impressions): Above 30%

- Hold rate (ThruPlays or 15-second video views divided by impressions): Aim for 10%

- Link click-through rate: Above 1%
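These metrics are plain ratios, so they are easy to compute from an export. Here is a minimal sketch using the definitions and benchmarks above. The function names and the sample numbers are ours, for illustration only.

```python
BENCHMARKS = {"hook_rate": 0.30, "hold_rate": 0.10, "link_ctr": 0.01}

def safe_ratio(numerator: float, denominator: float) -> float:
    """Divide, returning 0.0 when there is no denominator yet."""
    return numerator / denominator if denominator else 0.0

def video_metrics(impressions: int, three_sec_views: int, thruplays: int, link_clicks: int) -> dict:
    """Hook rate, hold rate, and link CTR, each as a share of impressions."""
    return {
        "hook_rate": safe_ratio(three_sec_views, impressions),
        "hold_rate": safe_ratio(thruplays, impressions),
        "link_ctr": safe_ratio(link_clicks, impressions),
    }

def creative_hit_rate(winners: int, tested: int) -> float:
    """Share of tested creatives that hit the KPI goal."""
    return safe_ratio(winners, tested)

# Made-up numbers for one video ad
metrics = video_metrics(impressions=10_000, three_sec_views=3_500, thruplays=1_200, link_clicks=130)
passes = {name: metrics[name] >= BENCHMARKS[name] for name in BENCHMARKS}
print(metrics)
print(passes)
print(creative_hit_rate(winners=4, tested=40))
```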

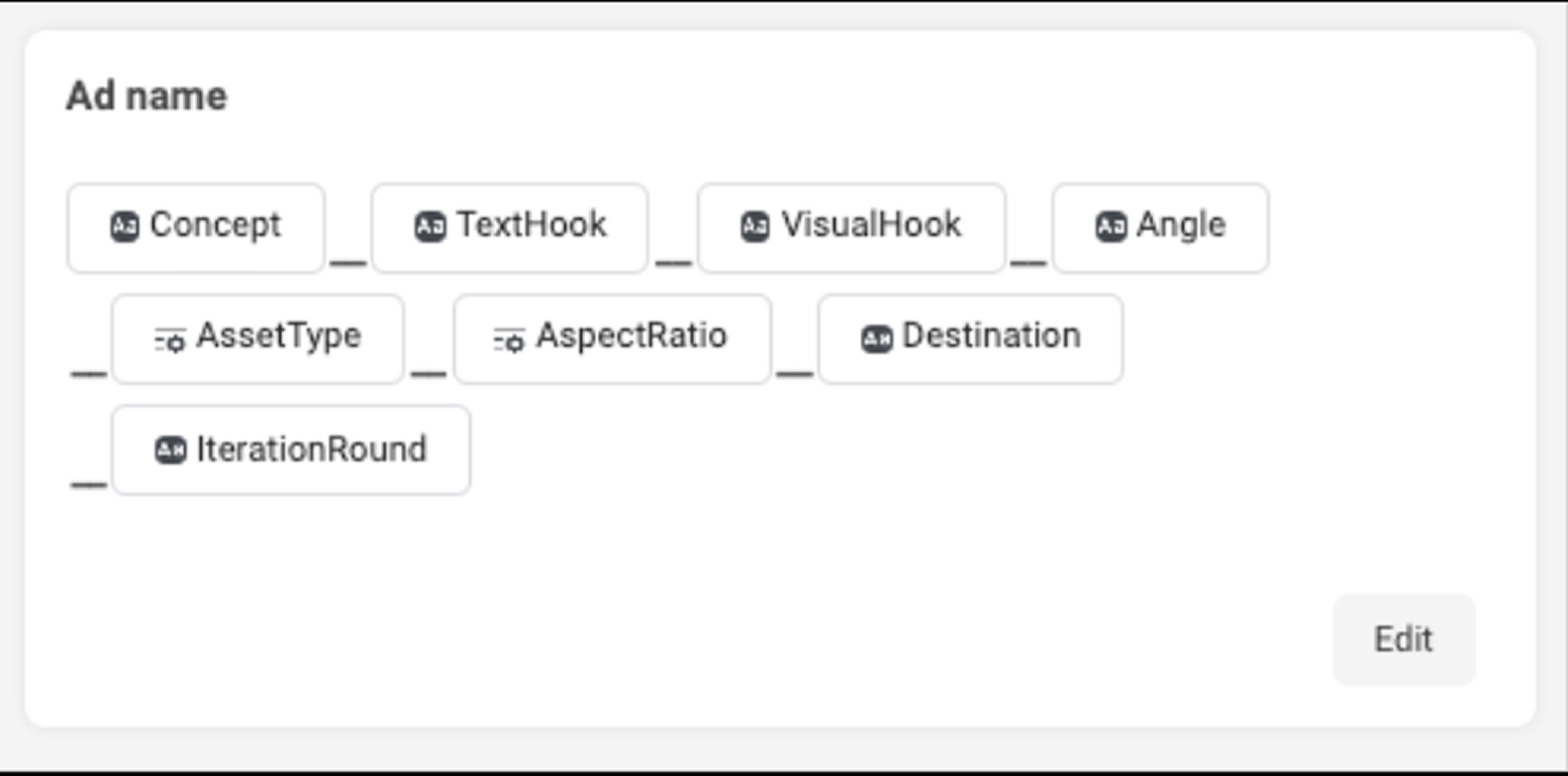

Very important: Follow a standardized naming convention at the ad level so that you can slice and dice the data in Excel. Split the ad name into its parts, then build a pivot table to see which concepts, text hooks, visual hooks, and so on are working.
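The same split-and-pivot idea works outside Excel too. The sketch below assumes a hypothetical underscore-delimited scheme of `concept_format_texthook_visualhook_version`. That scheme and the sample rows are ours, made up for illustration, and not the template from the webinar. It splits each ad name into fields and then rolls up CPA by any one field.

```python
from collections import defaultdict

# Hypothetical naming scheme: concept_format_texthook_visualhook_version
FIELDS = ["concept", "format", "text_hook", "visual_hook", "version"]

def parse_ad_name(name: str, sep: str = "_") -> dict:
    """Split an ad name into named fields; fail loudly if it breaks the convention."""
    parts = name.split(sep)
    if len(parts) != len(FIELDS):
        raise ValueError(f"ad name {name!r} does not match the {len(FIELDS)}-part convention")
    return dict(zip(FIELDS, parts))

def cpa_by(rows, field: str) -> dict:
    """Pivot: total spend divided by total results for each value of `field`."""
    totals = defaultdict(lambda: [0.0, 0])
    for row in rows:
        key = parse_ad_name(row["ad_name"])[field]
        totals[key][0] += row["spend"]
        totals[key][1] += row["results"]
    return {key: (spend / results if results else None) for key, (spend, results) in totals.items()}

# Made-up sample rows, for illustration only
rows = [
    {"ad_name": "sleep_video_3reasons_coffee_v1", "spend": 300, "results": 10},
    {"ad_name": "sleep_static_myth_postit_v1", "spend": 200, "results": 2},
    {"ad_name": "stress_video_3reasons_burrito_v1", "spend": 100, "results": 5},
]
print(cpa_by(rows, "concept"))
print(cpa_by(rows, "text_hook"))
```

The strict length check is deliberate: one ad named off-convention will quietly distort a pivot table, so it is better to catch it when parsing.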

Here’s an example of an ad name template:

And finally, keep an open mind because you never know which creative will work. Just keep testing and experimenting.