For this blog post, we’re joined by Samet Durgun (the “Growth Therapist”), who brings over a decade of hands-on experience helping mobile and subscription apps scale profitably through revenue-focused acquisition, creative strategy, and performance marketing.

Mobile attribution in 2026 doesn’t work the way it used to. Apple’s App Tracking Transparency (ATT) and SKAdNetwork (SKAN) fundamentally changed what’s measurable. Growth teams now work with aggregated signals that arrive 24-48 hours late instead of real-time user-level data.

But there’s a bigger problem most teams face in 2026: having attribution doesn’t mean you understand what drives revenue. Top apps stopped asking “where did installs come from?” They started asking “where did paying subscribers come from?” The shift from install attribution to revenue attribution changed everything about how successful apps measure performance.

How does SKAN attribution work?

Apple’s App Tracking Transparency changed everything when it launched with iOS 14.5 in April 2021. The impact was immediate and severe:

- Only 35% of iOS users opt into tracking today;

- This makes 65% of your iOS audience invisible to traditional attribution methods.

SKAN was Apple’s response to this tracking crisis, but it came with a new set of constraints. Built around strict privacy safeguards, SKAN delivers data that is aggregated, anonymized, and delayed, making real-time optimization extremely challenging.

With iOS 16.1, Apple introduced SKAN 4.0, expanding attribution capabilities by supporting re-engagement and multiple conversion values across different time windows.

In 2024, Apple went a step further and announced AdAttributionKit as SKAN’s successor, positioning it as the future of privacy-preserving attribution. Yet the core limitations remain unchanged: data is still aggregated, reporting is delayed, and you still can’t see creative-level performance.

Ad platforms are building their own solutions to work within privacy constraints. Meta developed Aggregated Event Measurement (AEM) and Google introduced Integrated Conversion Measurement (ICM).

Alternatively, web-to-app funnels have emerged as a distinct attribution path, shifting measurement upstream to the web.

What are SKAN’s postback windows?

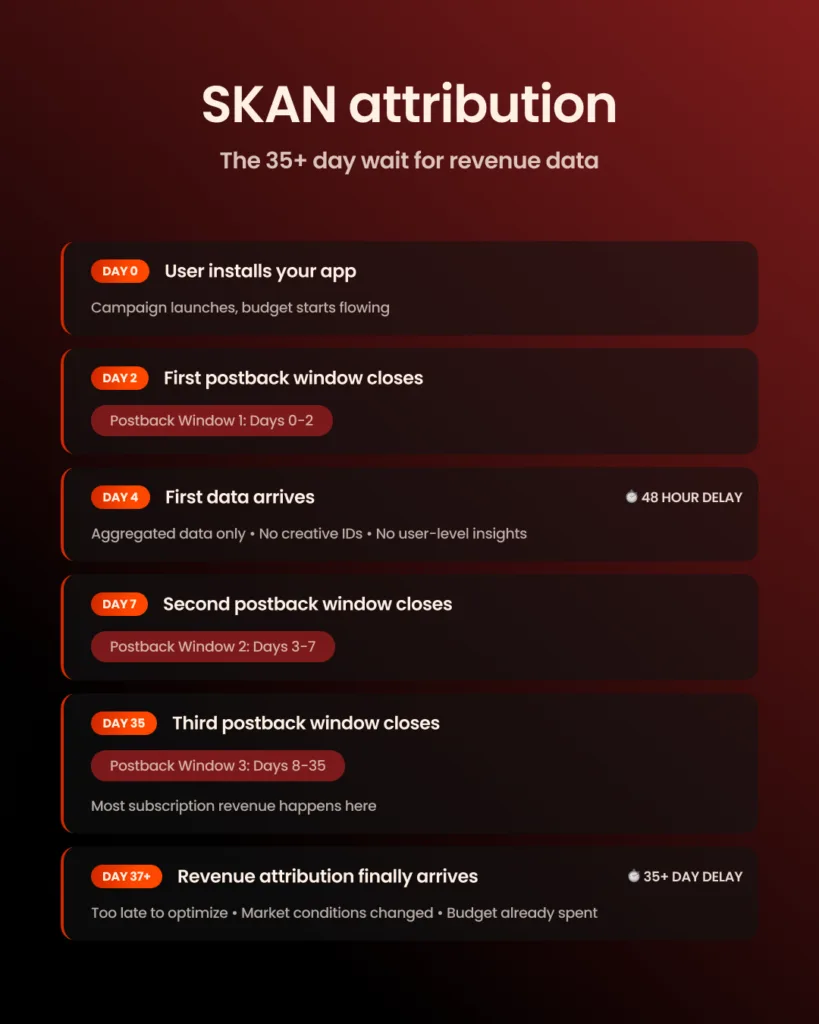

SKAN measures conversions across three distinct time windows, each with different delay periods:

- First window (0-2 days post-install): Conversion data arrives 24-48 hours after the window closes;

- Second window (3-7 days post-install): Additional delay for mid-funnel events;

- Third window (8-35 days post-install): Final revenue data arrives 35+ days after install.

This creates a measurement challenge. Early conversion signals arrive within 2–4 days. But measuring week-two or week-three subscription renewals means waiting 35+ days for the data to arrive.

For subscription apps where trial-to-paid conversion happens on day 7 or day 14, the most meaningful revenue signal appears weeks after the ad budget has already been spent.
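To make the window boundaries concrete, here is a minimal Python sketch that maps an event’s age (days since install) to the measurement window it falls into. The function name `skan_window` and the window labels are illustrative assumptions, not part of any Apple or MMP API; the day ranges are the ones listed above.

```python
from typing import Optional

# Window boundaries in days since install, as listed above (inclusive).
SKAN_WINDOWS = [
    ("first", 0, 2),
    ("second", 3, 7),
    ("third", 8, 35),
]


def skan_window(days_since_install: int) -> Optional[str]:
    """Return the name of the measurement window an event falls into.

    Returns None for events after day 35 (for example a renewal on
    day 40), which fall outside all three windows.
    """
    if days_since_install < 0:
        raise ValueError("days_since_install must be non-negative")
    for name, start, end in SKAN_WINDOWS:
        if start <= days_since_install <= end:
            return name
    return None


# A trial that converts to paid on day 7 lands in the second window;
# a day-14 conversion lands in the third.
print(skan_window(7))   # second
print(skan_window(14))  # third
```

Either way, the paid conversion is only reported in a later, slower postback, which is why the first window ends up carrying most of the optimization weight.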

As a result, most apps focus on the first postback window. Yet what they optimize for within that window varies significantly depending on their monetization model:

- Apps with direct purchases optimize for maximizing purchase count, or if they’re more mature, they optimize for purchase value (especially useful with multiple price points).

- Subscription apps running free trials typically optimize for trial starts. But teams know not everyone converts, so “Trial+” custom events are getting more popular. For example: “trial started but didn’t cancel within 2 hours.” More advanced setups use pLTV signals from external prediction tools.

- Some apps have both trials and direct purchases. They either pick one to optimize for, or combine them into a single custom event.
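A “Trial+” event like the one described above can be sketched in a few lines of Python. This is only an illustration: the `Trial` dataclass, its field names, and the `is_trial_plus` helper are hypothetical, and the two-hour grace period simply mirrors the example in the text rather than any specific SDK’s behavior.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Grace period taken from the example in the text
# ("trial started but didn't cancel within 2 hours").
GRACE_PERIOD = timedelta(hours=2)


@dataclass
class Trial:
    started_at: datetime
    cancelled_at: Optional[datetime] = None  # None means not cancelled


def is_trial_plus(trial: Trial, now: datetime) -> bool:
    """True once the grace period has passed with no cancellation inside it."""
    deadline = trial.started_at + GRACE_PERIOD
    if now < deadline:
        return False  # too early to tell; do not fire the event yet
    cancelled_early = (
        trial.cancelled_at is not None and trial.cancelled_at <= deadline
    )
    return not cancelled_early


start = datetime(2026, 1, 10, 12, 0)
kept = Trial(started_at=start)
dropped = Trial(started_at=start, cancelled_at=start + timedelta(minutes=30))
check_time = start + timedelta(hours=3)

print(is_trial_plus(kept, check_time))     # True
print(is_trial_plus(dropped, check_time))  # False
```

The trade-off is that the event fires at least two hours later than a plain trial start, in exchange for a signal that filters out the quickest cancellations.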

Why does mobile attribution feel broken in 2026?

Aggregated data hides performance

SKAN’s privacy constraints force all your data into aggregated buckets. You can see campaign-level performance in your MMP dashboard. You know which campaigns drove installs.

But here’s what you can’t see:

- Which creative performed best;

- Which ad variation drove conversions;

- Which audience segments responded to which creatives;

- Which creative + audience combinations work.

You’re looking at aggregated performance across all creatives in a campaign. This makes it impossible to identify winning variations. Run five different creatives and you’ll see combined results, but you won’t know which specific ad delivered your best results. That gap between data visibility and actionable insight is where modern app attribution tracking breaks down.

Feedback loop doesn’t work

SKAN 4.0 delivers conversion data with significant delays, from 24–48 hours for early events to 35+ days for later conversion windows. That means the creative driving results today might already be underperforming by the time you see the data.

This lag makes rapid creative iteration almost impossible. Effective performance marketing depends on tight testing cycles:

- Launch a creative

- Measure the response

- Adjust the strategy

- Repeat

When measurement comes with a two-day delay (or worse, a 35-day delay), optimization is always one step behind. Instead of responding to real-time user behavior, growth teams are forced to optimize for yesterday’s market.

The problem extends to ad platforms as well. Networks like Meta and TikTok depend on timely conversion signals to optimize delivery. When those signals arrive late and in an aggregated form across all creatives, the algorithms struggle to learn. The result: slower optimization, weaker performance, and limited scalability.

Install attribution ≠ revenue attribution

Traditional mobile attribution tools measure installs exceptionally well. They’ll tell you exactly:

- How many users downloaded your app;

- Which campaign drove them;

- How much you paid per install;

- Geographic distribution of installs.

For high-level channel decisions, this data works fine.

The problem: you’re measuring the wrong outcome. A campaign that drives 10,000 installs at $2 CPI might deliver zero paying subscribers. Another campaign driving 2,000 installs at $5 CPI might convert to 200 subscribers.

Without revenue attribution, you’d scale the first campaign and pause the second—the exact opposite of what you should do.
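The arithmetic behind that comparison is worth spelling out. The sketch below uses the two campaigns from the example above; the `Campaign` class and its field names are illustrative assumptions, not a real reporting API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Campaign:
    name: str
    installs: int
    cpi: float        # cost per install, in dollars
    subscribers: int  # paying subscribers attributed to the campaign

    @property
    def spend(self) -> float:
        return self.installs * self.cpi

    @property
    def cost_per_subscriber(self) -> Optional[float]:
        # Undefined when nobody converted; avoid dividing by zero.
        if self.subscribers == 0:
            return None
        return self.spend / self.subscribers


cheap = Campaign("A", installs=10_000, cpi=2.0, subscribers=0)
pricey = Campaign("B", installs=2_000, cpi=5.0, subscribers=200)

for c in (cheap, pricey):
    print(c.name, c.spend, c.cost_per_subscriber)
# A 20000.0 None
# B 10000.0 50.0
```

Campaign A costs twice as much in total and produces no subscribers, while campaign B acquires each subscriber for $50, despite having the higher CPI.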

The gap between “user acquired” and “revenue generated” is a black box in most app install attribution systems. You’re optimizing for a proxy metric (installs) instead of the actual outcome you care about (revenue). When attribution only reflects install performance, decisions tend to maximize volume while quietly eroding profitability.

What can in-app mobile attribution still track accurately?

Here’s what mobile attribution tracking can still measure reliably in 2026:

| What Works | What you get | Limitations |
| --- | --- | --- |
| Campaign-level performance | Which ad networks drive volume, high-level ROAS signals, broad channel comparison (Meta vs TikTok vs Google) | No creative-level data |
| Install trends | Daily install volumes, geographic distribution, basic demographics (where available) | Aggregated only |
| Post-install events | SKAN conversion values track key actions | 64-value limit, careful schema design required, data aggregated & delayed |
| Re-engagement campaigns | SKAN 4.0+ supports retargeting measurement | Similar constraints as acquisition |

Attribution data still flows reliably into your MMP. Install volumes, CPI, and basic post-install events remain accurate enough for high-level decisions, such as which channels or platforms to invest in.

SKAN also provides conversion values within Apple’s privacy thresholds. When volume is sufficient, you get aggregated insight into post-install behavior, but with clear limits. Data is capped by SKAN’s 64-value limit, making careful conversion value design essential to capture the signals that matter most.

What conversion values actually tell you

SKAN conversion values can track post-install actions, such as trial starts, purchases, or key feature usage. But you’re limited to 64 distinct values across your entire measurement framework.

Most apps use conversion values to track:

- Revenue tiers (e.g., $0-10, $10-50, $50+);

- Funnel completion stages;

- Time-to-conversion windows;

- Engagement levels.

The challenge is prioritization. Do you track day-1 revenue, day-3 revenue, or day-7 revenue? Do you measure subscription starts or actual paid conversions? Every choice requires trade-offs because the 64-value limit forces you to decide what matters most.

Apps that succeed with SKAN attribution have clear measurement priorities. They know which metrics actually predict long-term value and design conversion schemas around those signals.
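As an illustration of what designing a conversion schema can look like, here is a minimal Python sketch that packs three signals into a single value between 0 and 63. The layout is purely hypothetical: two bits each for revenue tier, funnel stage, and engagement level. The tier boundaries reuse the example tiers above, and none of the function names come from Apple’s or any MMP’s API.

```python
from typing import Tuple

# Hypothetical 6-bit layout (values 0-63):
#   bits 0-1: revenue tier, bits 2-3: funnel stage, bits 4-5: engagement.


def revenue_tier(revenue: float) -> int:
    """0 = no revenue, 1 = up to $10, 2 = up to $50, 3 = above $50."""
    if revenue <= 0:
        return 0
    if revenue <= 10:
        return 1
    if revenue <= 50:
        return 2
    return 3


def encode(revenue: float, funnel_stage: int, engagement: int) -> int:
    """Pack three 2-bit fields into one conversion value in [0, 63]."""
    for field in (funnel_stage, engagement):
        if not 0 <= field <= 3:
            raise ValueError("each field must fit in 2 bits (0-3)")
    return revenue_tier(revenue) | (funnel_stage << 2) | (engagement << 4)


def decode(value: int) -> Tuple[int, int, int]:
    """Unpack a value into (revenue_tier, funnel_stage, engagement)."""
    if not 0 <= value <= 63:
        raise ValueError("conversion value must be in 0-63")
    return value & 0b11, (value >> 2) & 0b11, (value >> 4) & 0b11


value = encode(29.99, funnel_stage=3, engagement=1)
print(value)          # 30
print(decode(value))  # (2, 3, 1)
```

The trade-off is visible in the layout itself: every bit given to engagement is a bit taken away from revenue resolution, so a team that cares mostly about revenue might spend all six bits on finer revenue tiers instead.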

In reality, the most advanced subscription apps have moved beyond SKAN entirely. They work with web-to-app flows, server-side event tracking (CAPI), and custom optimization events that give them real-time revenue signals without SKAN’s constraints.

What can’t in-app attribution measure well anymore?

Creative-level revenue impact is invisible

SKAN doesn’t pass creative IDs to advertisers. You can’t determine which ad variation drives subscriptions. Run five different creatives in a campaign and you’ll see aggregate performance across all five, but you won’t know which specific ad converted your highest-value users.

This creates a testing blind spot:

- Launch creatives without knowing which will perform;

- Wait days for aggregated signal;

- Spend budget on underperforming variations before you know they’re failing;

- Miss opportunities to scale winners while they’re hot.

By the time you get data, you’ve already spent budget on underperforming variations. The result is slower iteration, higher waste, and worse performance.

Funnel-step optimization hits a wall

Your App Store page has limited visibility in attribution tracking. You can’t A/B test store screenshots, copy, or preview videos with attribution. You can’t see which page elements correlate with quality users who actually subscribe.

Growth teams are optimizing on incomplete signals, guessing which store assets work based on overall install volume rather than revenue outcomes.

In-app paywalls have similar visibility problems:

- You can track paywall events (views, dismissals, purchases);

- You can’t attribute revenue, because you don’t know which ad drove the purchase;

- There is no ad-to-paywall connection, so you can’t determine which ad → paywall combination converts best;

- Optimization is disconnected: paywall testing happens in isolation from acquisition.

This means paywall optimization happens in isolation from acquisition. You’re testing offers without knowing which traffic source responds to which offer. Your funnel optimization becomes disconnected from your media buying.

Volume thresholds create blind spots

Apple’s privacy thresholds require approximately 100-150 installs per day per campaign before SKAN returns conversion values. Below that threshold, you get null values—complete data darkness.

But meeting Apple’s minimum doesn’t guarantee success. Ad platforms have their own requirements on top of Apple’s:

- Apple’s threshold: 100-150 installs/day to get conversion values;

- Meta’s SKAN threshold: 88 installs/day to receive conversion values (instead of CV=null);

- Combined requirement: Need to meet both before getting usable data.

Smaller campaigns return no data from Apple and provide insufficient signal to ad platforms. Testing new channels becomes prohibitively expensive. You can’t validate new markets at small scale because you need significant volume just to see if something works, plus additional volume for the ad platform to deliver conversion data.

Testing a new traffic source requires substantial budget commitment upfront. You need enough spend to hit volume thresholds before you’ll see any performance data. This makes experimentation costly and risky, especially for smaller apps with limited budgets.

The economics of blind testing

Consider the math:

- At $5 CPI: Hitting 100 installs/day requires $500 daily spend per campaign;

- Testing 3 campaigns: $1,500 per day or $45,000 per month;

- Just for basic data: Not even guaranteed good performance.
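The same math can be written as a small helper so you can plug in your own CPI and campaign count. This is a back-of-the-envelope sketch; the function name and the 30-day month are assumptions, and the install thresholds are the approximate figures quoted above.

```python
def monthly_test_budget(cpi: float, installs_per_day: int,
                        campaigns: int, days: int = 30) -> float:
    """Spend needed to keep every campaign at the daily install threshold."""
    daily_per_campaign = cpi * installs_per_day
    return daily_per_campaign * campaigns * days


# Lower end of the threshold range: 100 installs/day per campaign.
print(monthly_test_budget(cpi=5.0, installs_per_day=100, campaigns=3))  # 45000.0

# Upper end of the range: 150 installs/day per campaign.
print(monthly_test_budget(cpi=5.0, installs_per_day=150, campaigns=3))  # 67500.0
```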

For apps with lower LTV or smaller budgets, this creates a tricky loop. You need data to optimize, but you need a significant budget to get data. The result is that many apps either overspend on blind testing or avoid testing new channels entirely.

Data quality becomes guesswork

The result of the constraints covered above is a fundamental shift in how teams approach attribution:

- Stop expecting perfect numbers: Watch for trends and patterns instead of trusting individual data points

- Sanity-check everything: Cross-reference MMP data against App Store and Play Console dashboards manually

- Use MMPs as data collectors, not truth sources: Build your own fraud detection and validation layers on top

Nobody’s getting clean, reliable attribution anymore. The entire attribution stack has turned into guesswork. Teams that once relied on precise partner-level attribution now focus on spotting anomalies and watching for consistent trends rather than trusting individual data points.

What’s the difference between install attribution and revenue attribution?

The fundamental problem with mobile attribution in 2026 is simple: you’re measuring the wrong thing.

| Metric | Install Attribution | Revenue Attribution |
| --- | --- | --- |
| What it measures | Who installed your app | Who became a paying customer |
| Optimization goal | Lower CPI, higher install volume | Higher LTV, profitable ROAS |
| Visibility | Campaign → Install | Campaign → Creative → Funnel → Purchase |
| Speed | Delayed (24-48hrs with SKAN) | Real-time (with web flows) |
| Decision quality | Optimizing for volume | Optimizing for value |
| Common mistake | Scaling cheap installs = low-quality users | Scaling expensive installs might = high-LTV subscribers |

What revenue attribution actually needs:

- Ad-level tracking → funnel events → payment confirmation;

- Connected data across the entire user journey;

- The ability to see the complete journey from ad impression to subscription.

All in all, revenue attribution requires connected data across every touchpoint.

How do web-to-app funnels solve the attribution problem?

Some web-to-app setups move attribution to the web, where tracking still works properly. This means clean, deterministic attribution tracking using standard web tools: UTM parameters, pixels, and cookies.

There are three types of web2app setups, each with different measurement implications:

- Ad → web link → App Store → install: Still relies on traditional MMPs for attribution, faces same SKAN constraints;

- Ad → web onboarding → App Store → install → payment in app: Captures early funnel data on web but still needs MMP for revenue attribution;

- Ad → web onboarding + web payment → app unlocks via login: Complete attribution from ad to revenue, no MMP needed—standard web analytics (GA4, Amplitude) work fine.

The key distinction is where the first payment happens. The third setup, where users pay on the web before installing, gives you full attribution clarity.

What you get with web attribution:

- No ATT prompts required;

- No SKAN delays;

- User-level data where regulations allow;

- Complete visibility into ad, creative, and audience.

The advantage is simple: you know exactly which ad, creative, and audience drove the click before any install happens. When users later download your app, you’ve already captured complete attribution data on the web side.

This solves the privacy challenge by moving measurement to an environment where traditional tracking methods still function.
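As a small illustration of the web side, the sketch below pulls UTM parameters out of a landing-page URL using only Python’s standard library. The URL, the parameter values, and the `extract_utm` helper are made up for the example; how you name campaigns and creatives in your own UTMs is up to you.

```python
from urllib.parse import parse_qs, urlparse

UTM_KEYS = ("utm_source", "utm_medium", "utm_campaign", "utm_content", "utm_term")


def extract_utm(url: str) -> dict:
    """Return the UTM parameters present in a landing-page URL."""
    query = parse_qs(urlparse(url).query)
    # parse_qs returns a list per key; keep the first value for each UTM key.
    return {key: query[key][0] for key in UTM_KEYS if key in query}


landing = (
    "https://example.com/quiz"
    "?utm_source=meta&utm_campaign=winter_sale&utm_content=video_03&ref=abc"
)
print(extract_utm(landing))
# {'utm_source': 'meta', 'utm_campaign': 'winter_sale', 'utm_content': 'video_03'}
```

Storing these values alongside the funnel session is what lets a later purchase be tied back to a specific campaign and creative.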

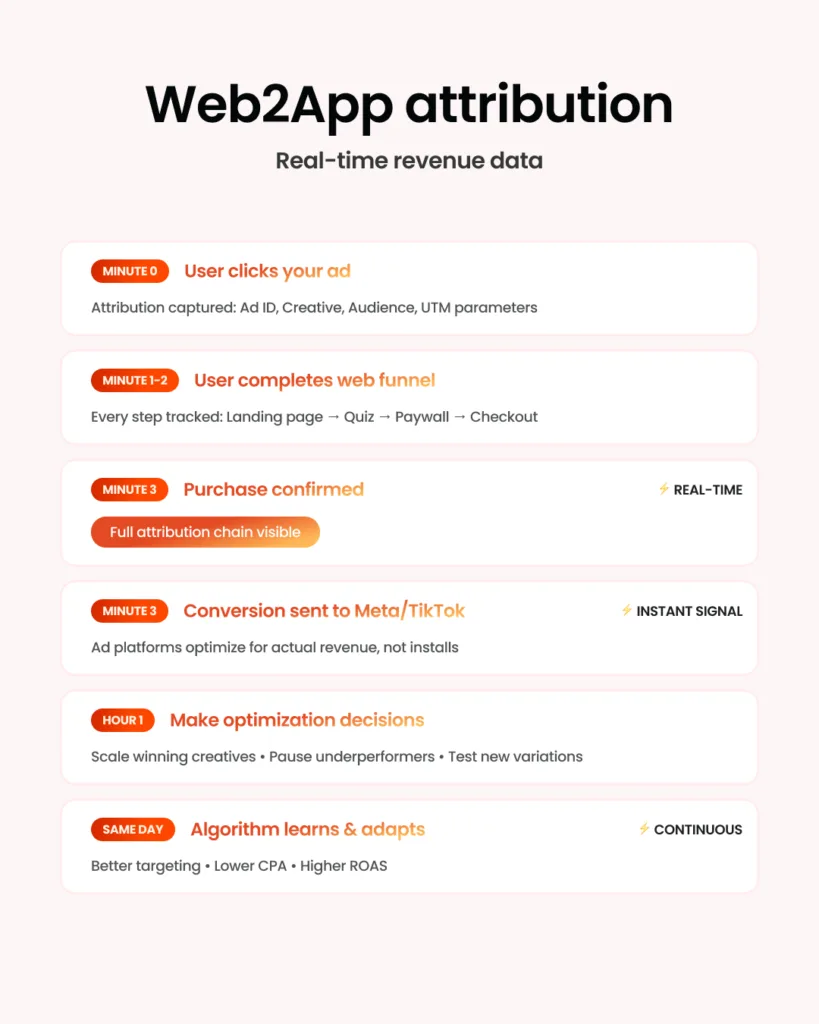

Every step is trackable

Web funnels give you clear ad → click → funnel → revenue visibility. You can see exactly where users drop off. Each step can be A/B tested independently, making it possible to optimize for revenue instead of installs.

What this unlocks:

- See precisely which funnel step loses users: Identify drop-off points in real-time;

- Test offers, copy, and design variations: Proper statistical significance on all tests;

- Optimize campaigns for purchase conversion: Not install volume.
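For readers who want to see what a significance check on a funnel test involves, here is a minimal sketch of a two-proportion z-test using only Python’s standard library. It is one common way to compare two conversion rates, not necessarily the method any particular tool uses, and the visitor and purchase counts are invented for the example.

```python
import math
from typing import Tuple


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in outcomes; the test is undefined")
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail
    return z, p_value


# Hypothetical test: variant A converts 150 of 5,000 visitors (3%),
# variant B converts 200 of 5,000 (4%).
z, p = two_proportion_z_test(150, 5000, 200, 5000)
print(f"z = {z:.2f}, p = {p:.4f}")
print("significant at the 5% level" if p < 0.05 else "not significant")
```

With these made-up numbers the difference clears the conventional 5% threshold; with a few hundred visitors per variant, the same one-point gap usually would not.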

But the real power comes from connection to ad platforms. You can feed conversion events—purchases, subscriptions—to Meta, TikTok, and Google immediately. Their algorithms optimize for actual purchases, not app installs. Your campaigns get smarter faster.

Faster, cleaner feedback loops

Web attribution provides real-time data. A user completes a purchase and the conversion fires immediately. You see performance within minutes, not days.

This speed changes everything:

- Underperforming campaigns get turned off the same day;

- Creative variations get validated or killed within hours;

- You iterate faster because the feedback loop actually works;

- Algorithms learn from clean, immediate signals.

The operational impact is significant. Instead of waiting two days to see if a creative works, you know within hours.

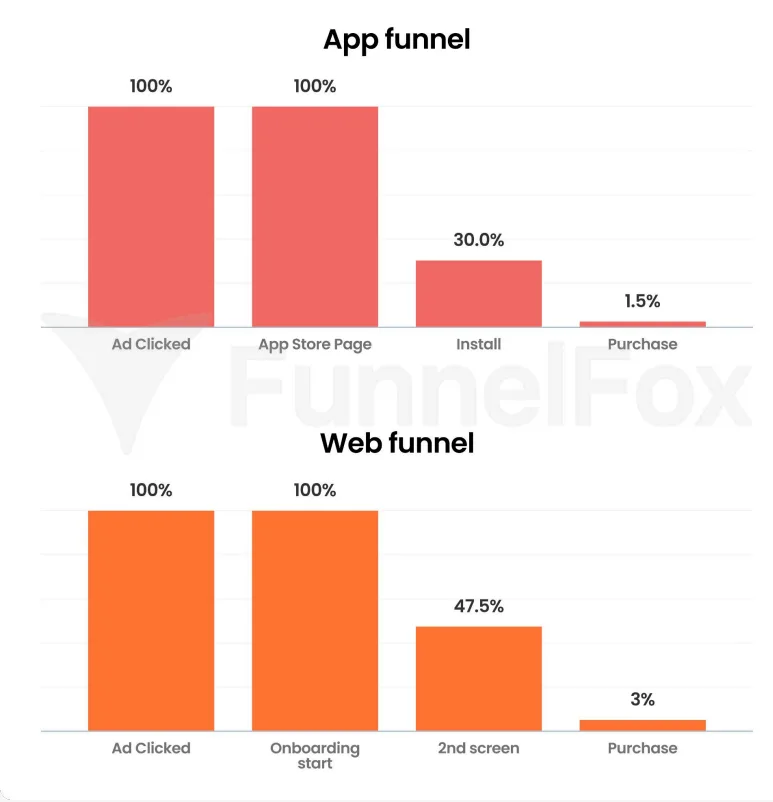

Higher conversion rates

Better attribution leads to better optimization, which leads to algorithm training that actually works. Web-to-app funnels consistently deliver higher conversion rates than direct-to-app campaigns.

According to the FunnelFox State of Web2App Report:

- Web funnels: 3% click-to-purchase conversion rates;

- Traditional app campaigns: 1.5% install-to-purchase rates;

- Result: 2x better conversion with web2app.

The reason is straightforward: you can optimize every step of the funnel. You see where users drop off and fix those steps. Ad algorithms receive clean purchase signals and deliver better quality traffic. You’re measuring and improving the metrics that actually matter—conversion to paying customer, not just conversion to install.

What does web2app attribution enable that in-app can’t?

Here’s what becomes possible when you move attribution to the web:

| Capability | In-App Attribution | Web2App Attribution |
| --- | --- | --- |
| ROAS visibility | 24-48hrs+ (SKAN delays) | Minutes (real-time) |
| Creative testing | Blind testing, slow iteration | Multivariate tests, same-day results |
| Paywall testing | Limited variants, slow rollout | 10+ variants simultaneously |
| Revenue attribution | Install-level, aggregated | Creative + audience + offer level |
| Traffic sources | UAC, app-specific networks | Google Search, affiliates, influencers, any web channel |
| Store fees | 15-30% commission | 3-8% payment processing |
| Optimization speed | Days to make decisions | Hours to scale/cut campaigns |

Web-to-app attribution gives you capabilities that simply don’t exist in traditional app attribution tracking:

- Creative-level revenue visibility: See exactly which ad creative drives paying subscribers, not just installs;

- Full funnel control: A/B test every element from landing page to payment—impossible when the App Store sits in the middle;

- Real-time optimization: Make decisions based on current data, not 48-hour-delayed aggregates.

Shmoody, a mood and habit tracking app, achieved an 80% ROAS increase in 3 months using FunnelFox’s web2app funnel solution. They eventually shifted 70% of their UA budget from mobile to web because the attribution clarity unlocked better optimization.

→ Read the full case study

Where does FunnelFox fit in?

Building web funnels traditionally means months of development work. You need:

- Landing pages

- Payment processing

- Deep linking infrastructure

- Attribution setup

- Subscription management

- A/B testing framework

Most teams spend 3-6 months building this infrastructure before they can test anything. FunnelFox gives you the complete infrastructure out of the box.

What FunnelFox provides:

- No-code funnel builder: Launch web2app flows in hours, not months. Drag-and-drop interface for building landing pages, quiz flows, and payment screens. No technical skills needed—marketing teams can build and iterate independently.

- Integrated attribution: Auto-connect ad platforms to funnel events to revenue. Clean revenue attribution tracking from day one without complex setup. Data flows automatically to Meta, TikTok, Google, and your analytics tools.

- Built-in payments: Accept payments on web, automatically sync subscriptions to your app. Handle the complexity of payment processing, subscription management, and app store receipt validation. Support for multiple payment providers and methods.

- A/B testing: Test offers, paywalls, and onboarding flows with proper statistical analysis. Know what works before you scale, with built-in experiment tracking and significance testing. Run multiple tests simultaneously without manual calculation.

- Revenue analytics: See which campaigns drive LTV, not just installs. Track full-funnel metrics from ad impression to long-term subscription value. Understand which acquisition channels deliver the most profitable customers.

→ Schedule a demo to see real-time attribution in action.

What should you do next?

If you’re frustrated with current attribution

Start by auditing what you’re actually optimizing for. Are you optimizing for installs or revenue?

Calculate the cost of delayed data:

- Review the last 90 days of spending;

- Identify campaigns you would have paused earlier with real-time attribution;

- Calculate wasted budget on underperforming campaigns before SKAN data arrived;

- That’s money you could have saved with faster feedback loops.
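A rough way to put a number on this is to multiply each paused campaign’s daily spend by the days it kept running while you waited for data. The sketch below does exactly that; the `PausedCampaign` fields and the sample figures are hypothetical, and the result is an upper-bound estimate rather than a precise accounting.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PausedCampaign:
    name: str
    daily_spend: float  # average daily spend before the pause, in dollars
    delay_days: float   # days spent waiting for delayed data before pausing


def wasted_spend(campaigns: List[PausedCampaign]) -> float:
    """Rough upper bound on spend that ran while waiting for delayed data."""
    return sum(c.daily_spend * c.delay_days for c in campaigns)


paused = [
    PausedCampaign("creative_test_1", daily_spend=400.0, delay_days=2),
    PausedCampaign("new_geo", daily_spend=250.0, delay_days=4),
]
print(wasted_spend(paused))  # 1800.0
```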

Identify your biggest blind spots:

- Creative performance: Not knowing which ad variations drive revenue;

- Audience quality: Understanding which targeting drives high-LTV subscribers;

- Offer testing: Validating pricing and trial structures;

- Funnel optimization: Knowing where users drop off.

Knowing where attribution tracking fails you is the first step toward fixing it.

Consider web2app if you:

☑️ Need faster feedback on campaign performance. If you’re testing new creatives weekly or running dynamic campaigns that require quick decisions, real-time attribution changes everything.

☑️ Want to test offers and pricing outside app stores. Apple and Google limit what you can test through their platforms. Web2app gives you complete control over offers, trials, and pricing variations.

☑️ Are ready to optimize for revenue instead of installs. If you’ve outgrown simple volume metrics and need to understand what actually drives profitable growth, revenue attribution is essential.

☑️ Have budget to experiment with new acquisition channels. Web2app requires upfront investment in funnel building and testing, but the attribution clarity often delivers ROI quickly.

Start small, scale what works

Run web2app as an additional channel alongside your existing app campaigns. Don’t replace everything at once—test first, validate results, then scale.

Start with one traffic source:

- Meta works well for most apps because of its optimization capabilities and audience targeting options;

- Get the attribution tracking working properly;

- Validate that data flows correctly;

- Prove the concept with one platform before expanding.

Compare revenue attribution from web2app to your traditional MMP data. Run parallel campaigns—one direct-to-app, one web2app—and measure the difference in attribution clarity and optimization capability. You’ll quickly see where web provides clearer insights into what actually drives revenue.

Scale the channels where web-to-app attribution tracking gives you better visibility and better results:

- If Meta campaigns show dramatically better attribution with web2app, shift more budget there;

- If certain audience segments convert better through web funnels, focus your growth efforts accordingly;

- Let data guide your budget allocation decisions.

Wrapping up

Install attribution still has a role in measuring high-level channel performance. You need to know which platforms drive volume and what your basic acquisition economics look like. That data remains valuable for strategic decisions.

But revenue decisions require revenue attribution. Understanding which campaigns, creatives, and audiences drive paying subscribers—not just installs—is the only way to scale profitably in 2026.

Web-to-app funnels provide the attribution clarity that in-app tracking can’t deliver anymore. If you’re serious about understanding what drives profitable growth, web2app isn’t optional. It’s the only way to see what’s actually working.