Most app teams run A/B tests. Few run them well.

When should you peek at results, and when should you wait? How do you pick metrics that make a difference? What’s the right sample size, and how do you know when to stop? And with AI creeping into every workflow, what changes when experiments run themselves?

In a live session, Phil Carter (Elemental Growth, ex-Faire, Quizlet, Ibotta), Joe Wilkinson (Chess.com, ex-Meta), and Andrey Shakhtin (FunnelFox) shared how they approach these challenges in practice.

Here are the takeaways.

If you’d rather watch the full session, the recording is linked at the end of this article.

Should you peek at results before a test ends?

Let’s be honest: everyone does it. You launch an A/B test, and within 48 hours, someone’s refreshing the dashboard, even though they know they’re not supposed to.

The theory vs. reality gap

In theory, you set your minimum detectable effect, calculate your sample size and runtime, and don’t touch the data until the test is done. That’s how you get clean, statistically sound results. But in the real world — especially at startups — things aren’t so clean.

Phil Carter from Elemental Growth puts it simply: “There are a lot of rules that get broken at startups.” If early results look strong, it’s tempting to call a test early — even if the stats team says not to.

The pressure is real. Executives want answers fast. And if you’ve been running a test for two weeks, and it’s clearly winning, do you really need to wait another two?

The textbook answer is yes. But the practical answer? Use judgment.

What Chess.com does

Chess.com runs a lot of experiments, especially in monetization. For high-stakes tests, they aim for 98% statistical significance (stricter than the usual 95%) before making rollout decisions.

But even with that standard, things aren’t always straightforward.

When a test runs for weeks without reaching that threshold, even if one variant looks directionally stronger, the team often chooses to iterate on the stronger variant rather than roll it out as-is.

And that discipline pays off. One test — reordering paywall tiers — looked like a clear win in the first few days. But the uplift faded fast. “Diamond on the far left ended up negative,” Joe admitted. If they’d rolled it out early, the long-term impact would’ve been worse than control.

So… peek or don’t?

Looking at interim results isn’t a crime, as long as you don’t make decisions based on early excitement. Novelty effects are real. What looks like a win today might flatten (or turn negative) tomorrow.
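To make the risk of acting on interim results concrete, here is a minimal simulation sketch. It runs A/A tests (no real difference between arms) and counts how often at least one look crosses the significance threshold. The function names, the 10% conversion rate, and the run counts are illustrative assumptions, not figures from the session.

```python
import random
from statistics import NormalDist

def z_stat(conv_a, n_a, conv_b, n_b):
    """Two-proportion z statistic with a pooled standard error."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = (pooled * (1 - pooled) * (1 / n_a + 1 / n_b)) ** 0.5
    return 0.0 if se == 0 else (conv_b / n_b - conv_a / n_a) / se

def false_positive_rate(peeks, n_per_arm=1000, rate=0.10, alpha=0.05,
                        runs=300, seed=7):
    """Share of simulated A/A tests that look 'significant' at any peek.

    All numbers here are hypothetical. Both arms share the same true
    conversion rate, so every significant result is a false positive.
    """
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    # Evenly spaced looks; the last one is always the planned end of the test.
    checkpoints = {n_per_arm * (i + 1) // peeks for i in range(peeks)}
    hits = 0
    for _ in range(runs):
        conv_a = conv_b = 0
        crossed = False
        for n in range(1, n_per_arm + 1):
            conv_a += rng.random() < rate
            conv_b += rng.random() < rate
            if n in checkpoints and abs(z_stat(conv_a, n, conv_b, n)) > z_crit:
                crossed = True  # a team acting on this peek would stop here
        hits += crossed
    return hits / runs

if __name__ == "__main__":
    print("one look at the end:", false_positive_rate(peeks=1))
    print("ten interim looks:  ", false_positive_rate(peeks=10))
```

With a single look, the rate should land near the chosen alpha. With ten looks it typically comes out noticeably higher, which is the statistical reason early excitement is a poor basis for rollout decisions.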

The trick is to define up front:

- How long you’ll run the test,

- What significance level you need,

- What would justify stopping early (if anything).

Then stick to it, or at least be very clear when you’re breaking your own rules.
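The up-front definitions above can be turned into numbers with a standard sample-size formula for comparing two conversion rates. The sketch below is one common approximation, not a method attributed to any of the speakers; `baseline`, `relative_mde`, and `daily_users` are hypothetical inputs.

```python
import math
from statistics import NormalDist

def sample_size_per_arm(baseline, relative_mde, alpha=0.05, power=0.80):
    """Approximate users needed per arm for a two-sided two-proportion test.

    baseline      -- control conversion rate, e.g. 0.05
    relative_mde  -- smallest relative lift worth detecting, e.g. 0.10 for +10%
    """
    p1 = baseline
    p2 = baseline * (1 + relative_mde)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)

def runtime_days(n_per_arm, daily_users, arms=2):
    """Days needed if all daily users are split across the arms."""
    return math.ceil(n_per_arm * arms / daily_users)

if __name__ == "__main__":
    n = sample_size_per_arm(baseline=0.05, relative_mde=0.10)
    print("users per arm:", n)
    print("days at 4,000 users/day:", runtime_days(n, daily_users=4000))
```

A stricter significance level or a smaller minimum detectable effect both raise the required sample, which is why these choices are best written down before launch rather than adjusted once results start coming in.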

How many experiments are enough?

With more than 200 million registered users, Chess.com has no shortage of data to work with. The company runs about 55 experiments per quarter across all teams, from monetization to trust & safety, gameplay, and learning. On the monetization side alone, around ten tests are active at any given moment, covering everything from membership pages and pricing to upsell emails and reminders.

But even with that volume, it’s still not enough — the company’s Chief Growth Officer has set a target of 1,000 experiments per year.

How not to get lost in the noise

But with that level of activity comes risk: noise, complexity, and confusion. The antidote, Joe says, is to start every experiment with a tightly defined hypothesis:

We think A will lead to B because of Y.

That one sentence keeps everyone aligned and limits unnecessary add-ons. Otherwise, “the moment you get your designer and your devs and leadership involved,” Joe warns, “things start getting added.”

This isn’t just theory. Small tweaks like changing a CTA or adding a sticky bar have produced massive lifts. But those only worked because the team avoided overloading the variant, resisting the urge to test everything at once.

Building an experimentation culture that scales

Just get started

For many companies, A/B testing feels intimidating at first. Should you build an internal platform? Buy one? What if you mess up the stats? According to Phil Carter, the most important thing is simply to begin:

From early tests to compounding advantage

As companies grow, the challenge shifts from “how to start” to “how to scale”. Running hundreds or thousands of experiments per year takes serious infrastructure, clean processes, and a strong testing culture.

But it’s worth it. Unlike ad campaigns that stop the moment the budget ends, product-led experiments create compounding impact. One good variant can keep paying off for months or years.

Think like a portfolio

Phil likens experimentation to managing a portfolio. Most teams hit about a 50% success rate with their experiments. That’s normal. If your win rate is much higher, you’re probably not taking enough risks.

Treat your A/B tests like investments. A few wins will drive most of the growth, but you can only find them by testing at scale.

Experiment types and when to use them

Most teams default to classic A/B, and that’s fine. It’s simple, it works, and it’s where almost everyone starts. But once your testing program matures, it helps to know your options.

Classic A/B and A/B/n

A/B is what it sounds like: a control vs. a single variant. A/B/n takes that further by testing multiple variants side by side — A, B, C, D, and so on.

You’ll usually define one success metric upfront, estimate the expected lift, and run the test long enough to see if anything beats the control. This is the backbone of experimentation at most companies.

A/A testing: sanity check your setup

Sometimes it’s not about testing changes — it’s about testing your infrastructure. That’s what A/A is for. You run two identical variants to make sure the randomization is working and there’s no unexpected bias.

Joe shared a real case from Chess.com: mobile users with slow internet were disproportionately ending up in the control group because of how their test bucketing worked. An A/A test helped them catch that.
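One common way to catch this kind of bucketing problem is a sample ratio mismatch check: compare the observed split between groups against the split you intended. The sketch below uses a chi-square test with one degree of freedom. It illustrates the general technique and is not a description of Chess.com's tooling; the function name and the counts are hypothetical.

```python
import math

def srm_p_value(n_control, n_variant, expected_control_share=0.5):
    """P-value for the observed split under the intended allocation.

    A very small p-value suggests the assignment itself is biased,
    so the test results should not be trusted until that is fixed.
    """
    total = n_control + n_variant
    exp_control = total * expected_control_share
    exp_variant = total - exp_control
    chi2 = ((n_control - exp_control) ** 2 / exp_control
            + (n_variant - exp_variant) ** 2 / exp_variant)
    # Survival function of a chi-square distribution with 1 degree of freedom.
    return math.erfc(math.sqrt(chi2 / 2))

if __name__ == "__main__":
    # Hypothetical counts, overall and for a slow-connection mobile segment.
    print("all users:      ", srm_p_value(50_210, 49_790))
    print("slow connection:", srm_p_value(5_300, 4_700))
```

Running the check per segment (platform, connection speed, country) as well as overall helps surface a skew that only affects one slice of users.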

“Do No Harm” tests

In a traditional A/B test, teams expect a measurable lift and agree not to roll out changes if that lift doesn’t materialize. A “do no harm” test flips this logic: the team already believes the new variant is strategically better, and only wants to ensure it doesn’t damage key metrics.

A good example: Chess.com had to comply with a new FTC regulation that made subscription cancellation easier. The test wasn’t about improving conversion; it was about making sure nothing broke.

In these cases, success isn’t a win, but rather a non-loss. You still track key metrics (like revenue or retention), but your bar is “no significant negative impact.” If that holds, you ship it.
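In statistical terms, a "no significant negative impact" bar is often framed as a non-inferiority test: you pick the largest drop you are willing to tolerate and check that the variant is credibly above it. The sketch below assumes a conversion-style metric; the `margin` value and the function name are hypothetical choices, not something stated in the session.

```python
from statistics import NormalDist

def no_harm_check(conv_c, n_c, conv_v, n_v, margin=0.005, alpha=0.05):
    """One-sided non-inferiority test on two conversion rates.

    Null hypothesis: the variant is worse than control by at least `margin`
    (absolute). Rejecting it supports shipping under a do-no-harm bar.
    Returns (passes, p_value).
    """
    p_c, p_v = conv_c / n_c, conv_v / n_v
    # Unpooled standard error of the difference in proportions.
    se = (p_c * (1 - p_c) / n_c + p_v * (1 - p_v) / n_v) ** 0.5
    z = (p_v - p_c + margin) / se
    p_value = 1 - NormalDist().cdf(z)
    return p_value < alpha, p_value

if __name__ == "__main__":
    # Hypothetical retention counts for control and variant.
    passes, p = no_harm_check(1000, 10_000, 995, 10_000, margin=0.01)
    print("safe to ship:", passes, "p-value:", round(p, 4))
```

The margin is a business decision. A tight margin needs more traffic to confirm, while a loose one lets real damage slip through.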

Multi-armed bandit: powerful, but not for everyone

This one’s for the pros. Unlike A/B, where traffic is split evenly, a multi-armed bandit dynamically reallocates traffic toward better-performing variants as the test runs.

Phil summarized both the appeal and the risk:

He noted that AI-powered platforms are making this more accessible. Tools like Helium are now offering out-of-the-box bandit-style testing for paywall optimization, backed by teams of specialists rather than in-house engineering.

At Meta, Joe’s trust & safety team applied multi-armed bandit to spam and abuse detection:

For most mid-stage companies, however, classic A/B and A/B/n remain the go-to. Bandit testing shines at massive scale, but its complexity and resource demands keep it out of reach for many teams.
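To show what "dynamically reallocating traffic" means in practice, here is a minimal sketch of Thompson sampling, one widely used bandit strategy. It is an illustration only: the session did not specify which algorithm Helium or Meta use, and the conversion rates below are made up.

```python
import random

def thompson_sampling(true_rates, visitors=5000, seed=42):
    """Simulate a Bernoulli bandit and return traffic sent to each arm.

    true_rates -- hypothetical conversion rate per variant, unknown to the
                  algorithm and used only to simulate user behaviour.
    """
    rng = random.Random(seed)
    wins = [0] * len(true_rates)
    losses = [0] * len(true_rates)
    for _ in range(visitors):
        # Draw a plausible rate for each arm from its Beta posterior
        # (uniform prior), then show the arm with the highest draw.
        draws = [rng.betavariate(wins[i] + 1, losses[i] + 1)
                 for i in range(len(true_rates))]
        arm = draws.index(max(draws))
        if rng.random() < true_rates[arm]:
            wins[arm] += 1
        else:
            losses[arm] += 1
    return [w + l for w, l in zip(wins, losses)]

if __name__ == "__main__":
    traffic = thompson_sampling([0.02, 0.20])
    print("visitors per variant:", traffic)
```

Because losing variants quickly stop receiving traffic, a bandit trades clean, evenly sized comparison groups for faster exploitation. That trade is part of why it tends to suit high-volume, narrowly scoped problems.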

Choosing the right metrics

For Chess.com’s monetization team, the primary metric is subscription paid rate. Supporting signals — trial starts, trial-to-paid conversions, and direct purchases — roll up into that number. Revenue is the ultimate goal, but subscription paid rate is the day-to-day measure of success.

To protect the product, every test also tracks guardrail metrics:

- Retention (1, 3, 7, 14, 30 days)

- Engagement with core features like games, lessons, puzzles, and reviews

These checks make sure monetization changes don’t hurt retention or activity.

Phil Carter emphasized the importance of focus:

Too many success metrics create false wins through statistical noise. For big, risky changes, Phil also recommends a premortem — anticipate worst-case outcomes and measure long-term impact.
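The arithmetic behind "false wins through statistical noise" is short enough to show directly. Assuming the metrics are independent (a simplification, since real product metrics are usually correlated), the chance that at least one of them looks significant by luck grows quickly with the number of metrics. The function names are illustrative.

```python
def chance_of_false_win(num_metrics, alpha=0.05):
    """Probability that at least one of several independent metrics
    crosses the significance threshold when nothing actually changed."""
    return 1 - (1 - alpha) ** num_metrics

def bonferroni_alpha(num_metrics, alpha=0.05):
    """Per-metric threshold that keeps the overall false-win rate near alpha."""
    return alpha / num_metrics

if __name__ == "__main__":
    for k in (1, 3, 5, 10):
        print(f"{k:>2} metrics: {chance_of_false_win(k):.1%} chance of a false win, "
              f"Bonferroni threshold {bonferroni_alpha(k):.4f}")
```

Committing to one primary metric keeps the decision at the nominal error rate, and the remaining metrics can still be tracked as guardrails or diagnostics.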

When to stop a test?

On monetization experiments, Chess.com usually targets 98% statistical significance — stricter than the standard 95% — to reduce the risk of false positives. In practice, tests don’t always reach that threshold. Some run for weeks without hitting significance, even if one variant looks directionally stronger. In those cases, the team prefers to iterate on the variant rather than roll it out blindly.

This discipline helps avoid the trap of novelty effects. Early results often look exciting — for example, reordering the paywall tiers initially boosted conversions, but the effect faded within weeks and one variant even turned negative.

Phil Carter pointed out why waiting matters:

That’s why strong teams define sample size and runtime in advance, then stick to it. It’s fine to glance at interim results, but making rollout decisions before the test is complete is risky — especially if the spike is just short-term excitement.
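As a rough illustration of the stopping rule described in this section, the sketch below runs a two-proportion z-test at the end of the planned runtime and maps the result to a decision. It assumes "98% statistical significance" means a two-sided p-value below 0.02; the function names, decision labels, and counts are hypothetical.

```python
from statistics import NormalDist

def two_proportion_test(conv_c, n_c, conv_v, n_v):
    """Return (absolute lift, two-sided p-value) for variant vs. control."""
    p_c, p_v = conv_c / n_c, conv_v / n_v
    pooled = (conv_c + conv_v) / (n_c + n_v)
    se = (pooled * (1 - pooled) * (1 / n_c + 1 / n_v)) ** 0.5
    z = (p_v - p_c) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_v - p_c, p_value

def decide(lift, p_value, confidence=0.98):
    """Map a finished test to a next step (labels are illustrative)."""
    if p_value < 1 - confidence:
        return "roll out" if lift > 0 else "keep control"
    # Not significant: treat the result as directional and keep iterating.
    return "iterate"

if __name__ == "__main__":
    lift, p = two_proportion_test(500, 10_000, 540, 10_000)
    print(f"lift={lift:+.4f}, p={p:.3f}, decision={decide(lift, p)}")
```

The check is meant to run once, at the sample size and runtime fixed in advance. Applying it repeatedly to interim data weakens the guarantee the threshold is supposed to provide.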

Testing revenue and LTV impact

Metrics like ARPU, revenue, and LTV are harder to evaluate than simple conversion rates. Chess.com relies on Amplitude’s stats calculator, plus dedicated analysts and finance support, to ensure accuracy. Templates with built-in LTV values for each plan (monthly and yearly) allow the team to quickly estimate the long-term impact of pricing or paywall changes.
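A template with built-in LTV values can be as simple as a lookup table applied to each variant's purchase mix. The sketch below shows the idea; the plan LTV figures and purchase counts are invented for illustration and are not Chess.com's numbers.

```python
# Hypothetical lifetime value per plan, in dollars.
PLAN_LTV = {"monthly": 40.0, "yearly": 90.0}

def expected_ltv_per_visitor(visitors, purchases_by_plan, plan_ltv=PLAN_LTV):
    """Estimated long-term revenue per visitor exposed to a variant."""
    total = sum(plan_ltv[plan] * count
                for plan, count in purchases_by_plan.items())
    return total / visitors

if __name__ == "__main__":
    control = expected_ltv_per_visitor(10_000, {"monthly": 300, "yearly": 100})
    variant = expected_ltv_per_visitor(10_000, {"monthly": 250, "yearly": 160})
    print(f"control: ${control:.2f} per visitor")
    print(f"variant: ${variant:.2f} per visitor")
    print(f"relative change: {(variant - control) / control:+.1%}")
```

Weighting by plan matters because a variant can shift the mix between monthly and yearly plans, so the purchase count alone may not reflect the long-term impact.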

During his time leading growth at Quizlet, Phil Carter’s team tightly integrated experimentation results into financial planning. Percentage lifts in ARPU or retention were plugged into models with finance to forecast annual impact and report to the board and investors.

How AI is reshaping experimentation

AI has been part of chess for years through game engines, but applying it to product experimentation is relatively new. On the monetization team at Chess.com, the biggest value so far comes from co-piloting tasks:

- Using ChatGPT to review experiment documentation and surface gaps.

- Generating QA cases for the Friends & Family plan — edge scenarios the team hadn’t considered showed up immediately.

- Testing prototyping tools to speed up design mockups and handoffs, a critical step toward hitting the goal of 1,000 experiments a year.

- Sharing AI use cases across teams via dedicated Slack channels, from quick analysis to creative workflows.

Some experimentation platforms are going further by embedding AI into the testing engine itself. Helium focuses on paywall optimization, where the variables are limited — copy, visuals, pricing, badges. AI generates new variants automatically and runs multi-armed bandit tests to reach significance faster.

For early-stage teams, even lighter applications can be handy. As Andrey Shakhtin noted, it’s now possible to export a dataset and have GPT calculate significance on the fly — a shortcut that would have been unthinkable just a year ago.

Advice for teams just starting out

The first rule is simple: just get started. Overthinking tools and statistical details delays learning. Small, imperfect tests are better than none.

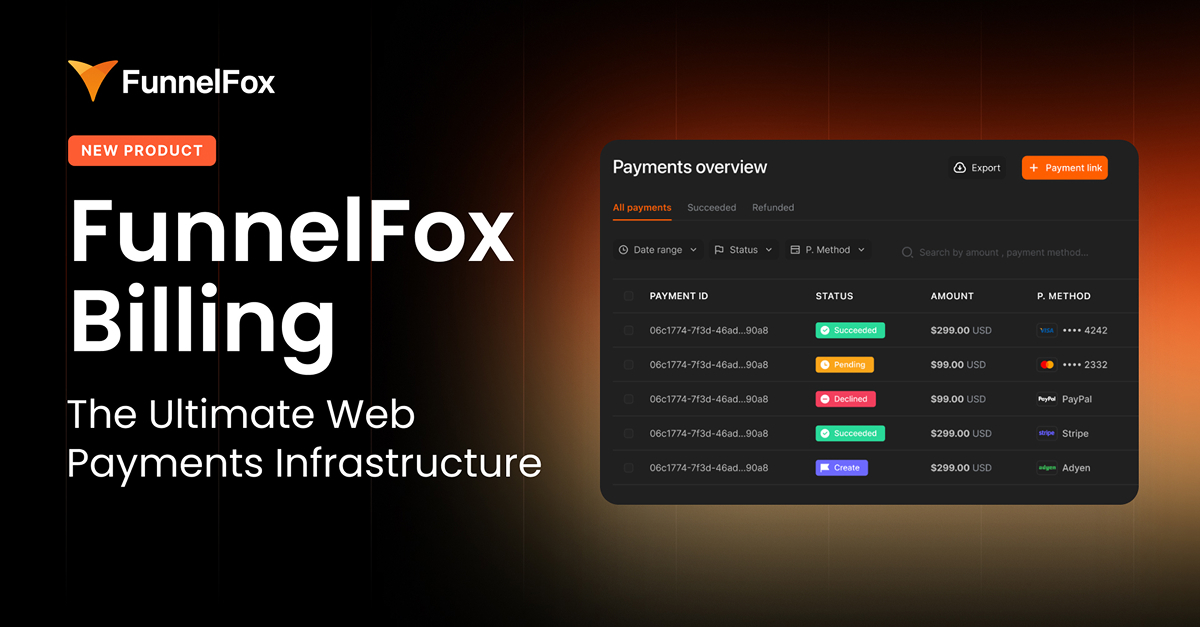

The second rule: don’t overcomplicate tooling. Building a full experimentation platform in-house can take years. Chess.com has invested five years and a dedicated team into their internal system — a huge effort. For most companies, the faster route is to use existing solutions. Options include Eppo, VWO, GrowthBook, Optimizely, RevenueCat, Helium, Superwall, and Adapty.

Finally, experiments should start small and focused. Early tests work best when they isolate one variable — like CTA copy or the order of pricing tiers on a paywall. Testing too many changes at once makes it impossible to know what actually drove results. Iteration is where momentum comes from: answer one question, move to the next, and build learnings step by step.

Wrapping up

A/B testing isn’t about chasing quick wins. It’s about building the muscle to run disciplined experiments, choose the right metrics, and learn fast without getting fooled by noise.

The big lessons from this session:

- Don’t call tests early — novelty fades.

- Anchor on one primary metric and protect your guardrails.

- Scale volume without losing focus.

- Use AI where it cuts time, but keep humans on strategy.

Most teams overcomplicate or undercommit. The ones that win treat experimentation as a core growth engine, not a side project.